How to create music visuals for your live performance?

Some live performance references

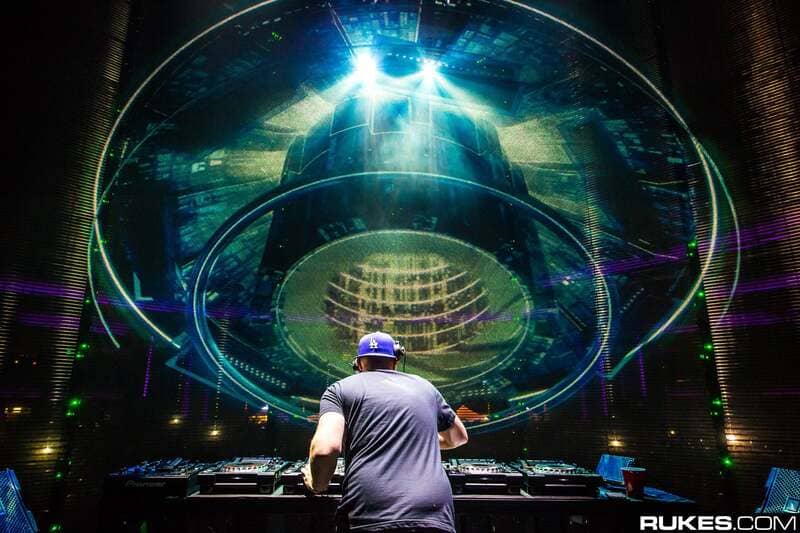

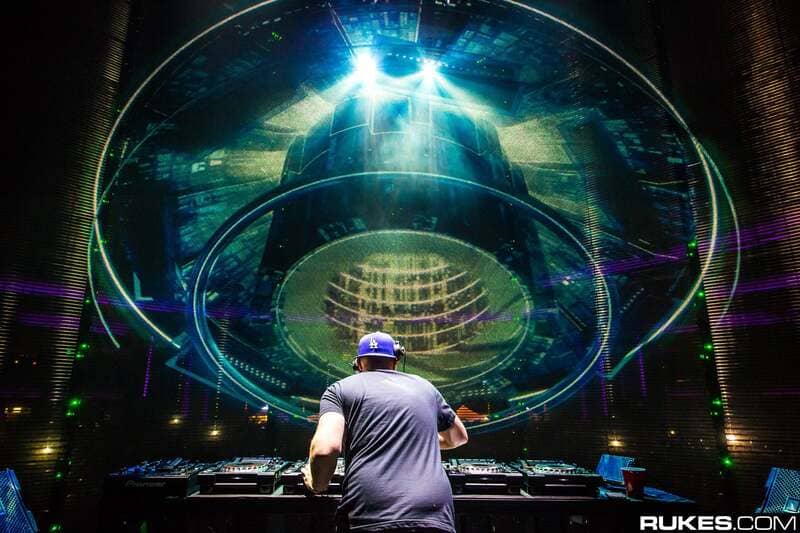

If you’re a fan of electronic music, you’ve probably noticed that more and more artists and festivals are investing in stage design. It usually consists of fixed set elements, sometimes combined with video projection and LED screens. Events like Tomorrowland or Dour Festival are very good examples. Among the best-known artists, we can mention Amon Tobin, Aphex Twin and Eric Prydz, who pioneered the use of music-responsive visuals on large-scale stages.

However, such installations require long design times and the collaboration of a dedicated creative team. The high resolution of the images and the need for them to react to the music are complex problems to solve. To guarantee tight synchronization between sound and image during a live performance, latency must be as short as possible. It is also often necessary to use several networked computers and to keep them synchronized with each other. Creative skills are therefore not enough: real technical expertise is required. The processes to be mastered can be grouped under the term VJ technologies, which covers techniques such as computer graphics, programming, audio and video signal processing, and live recording.

The goal of this article is to demonstrate that creating music-responsive visuals for small- to medium-scale stage performances is not as out of reach as one might think. With accessible software and affordable hardware, it is quite possible to build a live audiovisual performance without dedicating weeks of work to it. To illustrate this point, artists Control Random, Neurotypique, Marin Scart and Jean-Michel Light shared the design stages of their latest performance in a limestone quarry.

Focus points for live visual effects: FPS and latency.

When creating music responsive visuals for a live performance, the two focus points are the fluidity of the visual animations and the latency between music and video.

Images must be generated at a rate of at least 24 frames per second. This rate is called the frame rate and is expressed in FPS (frames per second). Below this value, for example at 12 frames per second, animations appear jerky. For fast animations, it is often necessary to aim higher than 24 FPS: 30 or even 60 FPS.
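To make these numbers concrete, here is a minimal sketch that converts a frame rate into the time available to produce each image. The function name `frame_budget_ms` is ours, chosen for illustration; it is not part of any of the tools mentioned in this article.

```python
def frame_budget_ms(fps: float) -> float:
    """Return the time available to render one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# Frame rates discussed in the article.
for fps in (12, 24, 30, 60):
    print(f"{fps:>2} FPS -> {frame_budget_ms(fps):.1f} ms per frame")
```

At 24 FPS the computer has roughly 41.7 ms to produce each image; at 60 FPS it has only about 16.7 ms, which is why heavier effects make a high frame rate harder to hold.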

For example, in video games with very fast animations, gamers favor frame rate over resolution so as not to miss any action: the experience is better at 60 FPS in Full HD than at 30 FPS in 4K. There is thus a trade-off between resolution and FPS.
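A rough way to compare the two settings is to count how many pixels must be produced each second. The sketch below assumes the usual 1920×1080 (Full HD) and 3840×2160 (4K UHD) resolutions, and treats pixel throughput as a simple proxy for rendering cost, which real effects only approximate.

```python
def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Number of pixels that must be produced every second."""
    return width * height * fps


full_hd_60 = pixels_per_second(1920, 1080, 60)
uhd_30 = pixels_per_second(3840, 2160, 30)

print(f"Full HD @ 60 FPS: {full_hd_60:,} pixels/s")
print(f"4K      @ 30 FPS: {uhd_30:,} pixels/s")
print(f"Ratio: {uhd_30 / full_hd_60:.1f}x")
```

Under this approximation, 4K at 30 FPS asks for twice as many pixels per second as Full HD at 60 FPS, while delivering half the temporal smoothness.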

The more the computer is used for resource-intensive processing, such as visual effects or simultaneous playback of several HD videos, the more the FPS number will tend to drop. It is generally possible to display the FPS number through the settings of your graphics card or those of the software used. It is then up to you to find the best balance between the complexity of the visuals and the FPS limit below which you should not drop during your live performance.
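If your software does not display the FPS, it can be estimated from frame timestamps. The following is a minimal sketch of a rolling-average meter; the class name `FpsMeter` and the window size are illustrative assumptions, and the timestamps are simulated here so that the example is reproducible.

```python
from collections import deque


class FpsMeter:
    """Rolling-average FPS computed from frame timestamps (in seconds)."""

    def __init__(self, window: int = 60):
        # Keep only the most recent `window` timestamps.
        self.timestamps = deque(maxlen=window)

    def tick(self, now: float) -> None:
        """Record the time at which a frame was displayed."""
        self.timestamps.append(now)

    def fps(self) -> float:
        """Average frame rate over the recorded window."""
        if len(self.timestamps) < 2:
            return 0.0
        elapsed = self.timestamps[-1] - self.timestamps[0]
        if elapsed <= 0:
            return 0.0
        return (len(self.timestamps) - 1) / elapsed


meter = FpsMeter()
for i in range(30):
    meter.tick(i * 0.04)  # simulated frames, one every 40 ms

print(f"Measured: {meter.fps():.1f} FPS")
```

In a real render loop you would call `meter.tick(time.perf_counter())` once per frame and watch whether the value stays above the limit you have set for your performance.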

Latency is the delay between an event in the music and its effect on the image. For example, you might want each “kick” in the music to trigger an audio-reactive distortion effect. As a general rule, humans perceive two events as simultaneous if the delay between them is less than 100 ms. There is no absolute value to respect, but a delay greater than 100 ms is likely to be noticed. The lower the FPS, the greater the latency of your music-responsive effects, because each frame stays on screen longer before the next one can show the reaction.
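The link between FPS and latency can be sketched with simple arithmetic. The example below assumes, hypothetically, that the visual chain delays the image by a fixed number of frames (`frames_of_delay`) and optionally adds an audio input delay; both parameters are illustrative and should be measured on a real setup.

```python
PERCEPTION_THRESHOLD_MS = 100.0  # rule of thumb quoted in the article


def visual_latency_ms(fps: float, frames_of_delay: int,
                      audio_input_ms: float = 0.0) -> float:
    """Estimated delay between a sound event and its visible reaction."""
    return audio_input_ms + frames_of_delay * 1000.0 / fps


def is_perceptible(latency_ms: float) -> bool:
    """True when the delay exceeds the 100 ms rule of thumb."""
    return latency_ms > PERCEPTION_THRESHOLD_MS


for fps in (24, 60):
    latency = visual_latency_ms(fps, frames_of_delay=3)
    print(f"{fps} FPS, 3 frames of delay: {latency:.0f} ms, "
          f"perceptible: {is_perceptible(latency)}")
```

With three frames of delay, the reaction arrives after 125 ms at 24 FPS but after only 50 ms at 60 FPS, so the same chain can feel late or tight depending on the frame rate alone.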

In a concert or DJ set, where the music is played live rather than pre-recorded, the music always takes precedence over the images. It cannot be slowed down to match the visual animations, so it is the images that have to adapt so as not to appear out of sync. Either reduce the number of active effects so as not to slow down the computer, or plan to use a more powerful machine.

A common technique used by many video jockeys (VJs) is to divide the system into two parts: pre-rendered video clips are played and mixed together, then combined with visual effects applied live, which may or may not react to the music. All the video clips must then share the same FPS so that the VJ can adjust their speed to the music being played.
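As an illustration of this speed adjustment, the sketch below assumes that each clip was authored to loop in time with a known tempo (`clip_bpm`, a hypothetical piece of metadata) and computes the playback speed needed to follow the music. When every clip shares the same FPS, the same factor can be applied to all of them.

```python
def playback_speed(track_bpm: float, clip_bpm: float) -> float:
    """Speed factor that makes a clip authored at clip_bpm follow track_bpm."""
    if track_bpm <= 0 or clip_bpm <= 0:
        raise ValueError("tempos must be positive")
    return track_bpm / clip_bpm


def loop_seconds(beats: int, bpm: float) -> float:
    """Duration of a loop lasting a given number of beats."""
    return beats * 60.0 / bpm


speed = playback_speed(track_bpm=128, clip_bpm=120)
print(f"Playback speed: {speed:.3f}x")
print(f"4-beat loop: {loop_seconds(4, 120):.3f} s -> {loop_seconds(4, 128):.3f} s")
```

Here a clip made for 120 BPM is played about 6.7% faster so that its four-beat loop shrinks from 2 s to 1.875 s and stays locked to a 128 BPM track.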

An example of a live performance using music reactive visuals

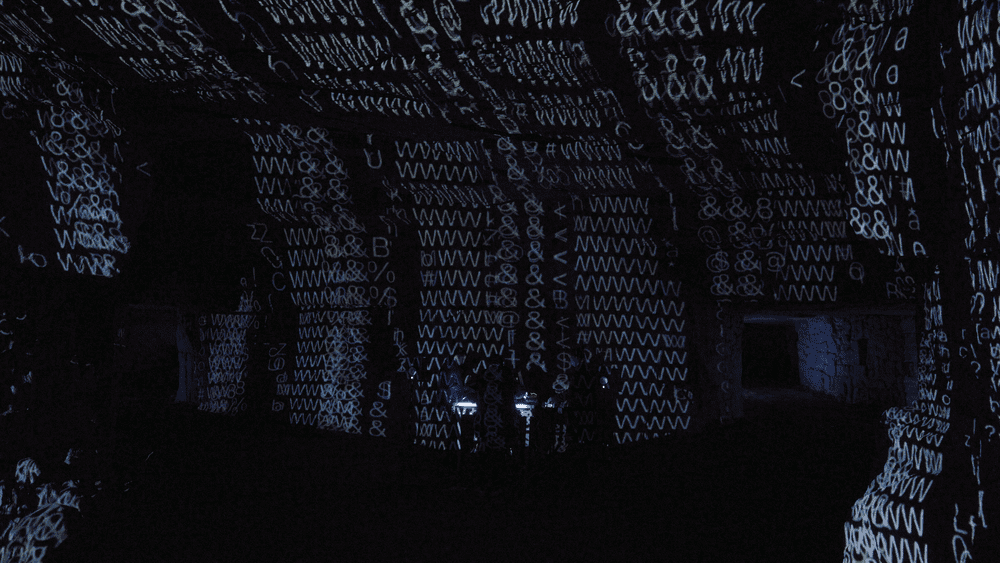

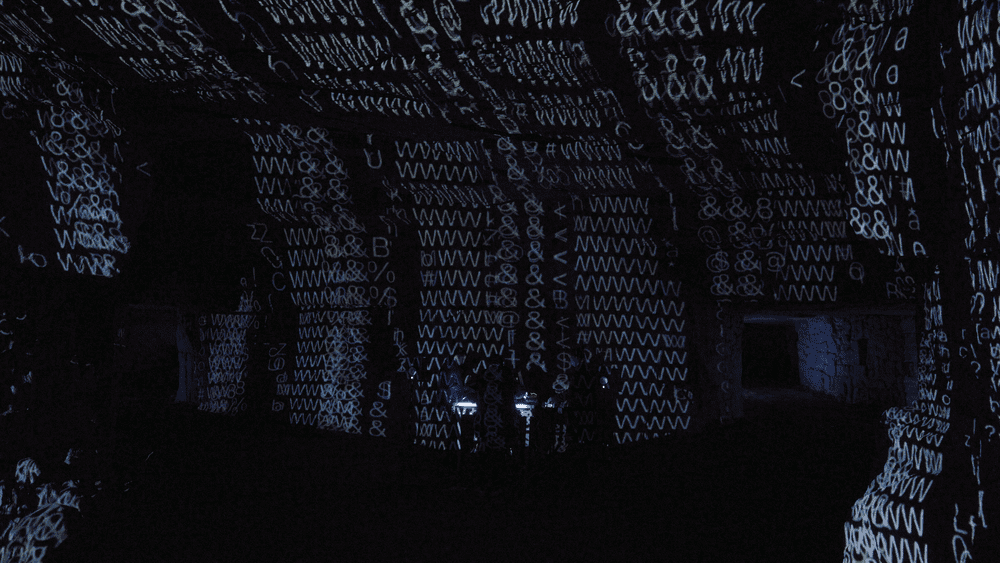

The Stone Mine Mapping performance is designed to explore the interactions between sound and image and to question the place of the spectator. It is a real-time audiovisual performance in which music and image are created live.

Control Random, Maison Sagan and Marin Scart were driven by the idea of a piece that could never be repeated exactly. Their collective wish was to create a multidisciplinary project that went beyond the experience and expertise of each artist. Aware of being surrounded by sedimentary rock, they wanted to question the past, present and future of this material, the different states it had gone through and what it might become. In this five-part journey, music-reactive visuals are linked to the sound using audio and CV (control voltage) information, which drives generative patches in image creation software.

The artists of this live performance

The music is created live by Control Random using a Eurorack modular synthesizer system. His compositions, mostly performed live, can be found on his Instagram and Bandcamp profiles.

Marin Scart and Neurotypique from Maison Sagan created an image generation system composed of an analog part based on modular synthesizers and a computer part running TouchDesigner and HeavyM.

Finally, Jean-Michel Light added DMX controlled lights to better appreciate the space of the quarry.

In practice: the solutions used for visual effects of the live performance

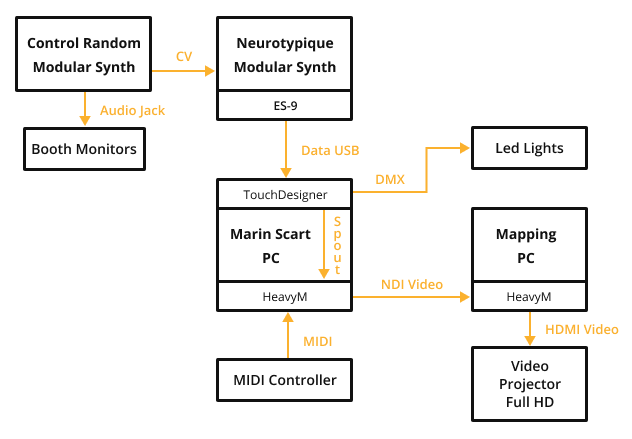

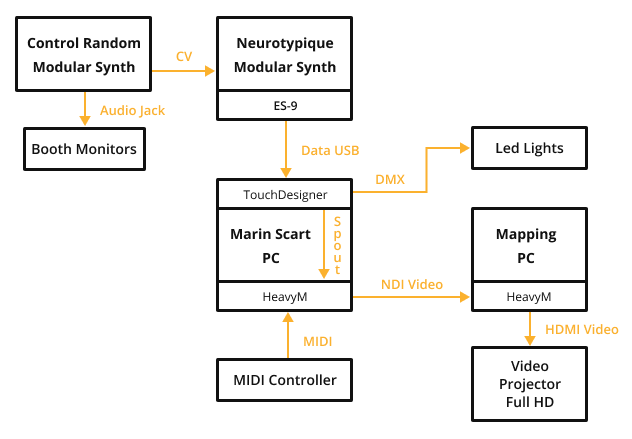

To create visuals in rhythm with the music, Neurotypique and Marin Scart interconnected the different elements of the system. Control Random’s modular synthesizers are analog electronic instruments that generate sound waves from oscillators. The various signals generated are sent to Neurotypique’s modules, which perform filtering and on/off switching operations. The analog signals are then converted into digital signals that Marin’s computer can interpret. TouchDesigner generates visual effects that react to the music; these are then modified with shaders applied by HeavyM and controlled live with a MIDI instrument. Finally, the video signal is sent to a second computer that performs the mapping, i.e. the positioning of the visual effects on the walls of the room.

Live performance system overview

The sound source: modular synthesizers

A modular synthesizer is an assembly of independent modules, each of which fulfills a fundamental function: oscillator, filter, effect, etc. The possible combinations are virtually endless and allow artists to produce music that is unique to them. Control Random and Neurotypique used the Eurorack system, a standard format for modular synthesizers. All the available modules are listed on the Modular Grid website. There are more than 11,000 of them!

Of course, it is not necessary to use modular synthesizers to create visual effects in sync with the music. You can use any source: a DJ mixer, a computer jack, or even a microphone.

Computers

To keep the frame rate close to 60 FPS and avoid latency problems during the live performance, the artists chose to use two computers. This configuration distributes the processing and divides the workload between the machines. It also lets two people work in parallel without interfering with each other and makes problems easier to identify during the performance setup.

The visual effects computer

The computer to which the audio information is sent is a tower PC with Windows 10, an Intel i7 processor, an NVIDIA GeForce GTX 1650 graphics card and 16 GB of RAM.

TouchDesigner and HeavyM run on this computer.

The image distortion computer

The video mapping computer, which is used to fit the images to the space, is a laptop with Windows 10, an Intel i5 processor, an NVIDIA GeForce GTX 1050 graphics card and 8 GB of RAM.

Only HeavyM runs on this computer.

For more tips on what to look for in a video mapping computer, check out the dedicated computer article.

The projector

The projector used is an Optoma WU630, a 6000-lumen Full HD model, fitted with a short-throw lens with a throw ratio of 0.9.

For more advice on choosing a video projector, please refer to the dedicated article on video projectors.

LED lights

The lights used to illuminate the tunnels on the sides are battery-powered Astera Titan Tubes.

Audio-responsive visual effects software

TouchDesigner

Neurotypique and Marin Scart programmed their own composition in TouchDesigner.

Developed by Derivative, TouchDesigner lets you create real-time visual effects using a node-based programming system. Its strength is the virtually unlimited number of possible combinations, together with a graphics pipeline well suited to live performances. However, it takes some time to master before you can get exactly what you want.

HeavyM

HeavyM is developed by Digital Essence and lets you create music-responsive effects in a few clicks. In the Stone Mine Mapping project, HeavyM was used at two levels. The first is applying effect shaders to the TouchDesigner video output. The second is warping the overall image to fit the projection space.

HeavyM directly integrates audio analysis features that let you make visual effects react to the music being played. All you have to do is connect the computer to an audio source, for example with a jack cable. You can also use an external sound card.
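To give an idea of what audio analysis can involve, here is a minimal sketch of one common approach: measuring the RMS level of short windows of samples and flagging the moments when it rises above a threshold. This is a generic illustration on a synthetic signal, not a description of how HeavyM analyzes audio; the function names, window size and threshold are assumptions.

```python
import math


def rms(samples):
    """Root-mean-square level of a block of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def detect_hits(samples, window, threshold):
    """Return the indices of windows where the level rises above threshold."""
    hits = []
    above = False
    for start in range(0, len(samples) - window + 1, window):
        level = rms(samples[start:start + window])
        if level >= threshold and not above:
            hits.append(start // window)  # rising edge only
        above = level >= threshold
    return hits


# Synthetic signal: 8 windows of 100 samples, loud bursts in windows 2 and 5.
WINDOW = 100
signal = []
for w in range(8):
    amplitude = 0.9 if w in (2, 5) else 0.02
    signal.extend(amplitude * math.sin(2 * math.pi * 5 * n / WINDOW)
                  for n in range(WINDOW))

hits = detect_hits(signal, WINDOW, threshold=0.3)
print("Hits detected in windows:", hits)
```

Each detected hit could then trigger a visual effect, such as the distortion on every kick mentioned earlier. On real music the threshold usually needs tuning, and filtering the low frequencies first helps isolate the kick.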

Communication between the performance's components

To generate music-responsive visuals, the various hardware and software elements must exchange information.

The modular synthesizers send audio information through a dedicated module: the Expert Sleepers ES-9. This is an analog-to-digital converter that turns electrical signals into digital data that TouchDesigner can read. This type of hardware is not required, however: as noted above, HeavyM’s built-in audio analysis can work from any audio source connected to the computer, through a jack cable or an external sound card.
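Once a signal has been converted to digital data, it typically has to be rescaled before it can drive a visual parameter. The sketch below assumes, for illustration only, that the converted value arrives as a number between -1 and 1 and that the target is a hypothetical distortion amount between 0 and 50; the actual ranges depend on your converter and software.

```python
def normalize(value: float, low: float, high: float) -> float:
    """Map value from [low, high] to [0, 1], clamping out-of-range input."""
    if high <= low:
        raise ValueError("high must be greater than low")
    ratio = (value - low) / (high - low)
    return min(1.0, max(0.0, ratio))


def scale(ratio: float, out_low: float, out_high: float) -> float:
    """Map a 0..1 ratio onto the range of a visual parameter."""
    return out_low + ratio * (out_high - out_low)


# Hypothetical incoming value of 0.5 on an assumed -1..1 input range.
ratio = normalize(0.5, low=-1.0, high=1.0)
distortion = scale(ratio, out_low=0.0, out_high=50.0)
print(f"ratio={ratio}, distortion={distortion}")
```

Clamping protects the visuals from unexpected peaks: a value outside the assumed input range simply pins the parameter at its minimum or maximum.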

Once the music information is received, TouchDesigner generates the visual animations and sends them to HeavyM over a Spout link, a technology that lets one application share its video output with another on the same computer. HeavyM receives the video and Marin Scart applies additional visual effects using a MIDI controller. HeavyM’s output is then sent to another instance of HeavyM on the second computer using NDI, a technology that delivers a video stream over a network with minimal latency. Finally, that second instance adjusts the geometry of the video and sends it to the video projector, which displays it in the live performance space.

To complement the video projection, LED lights were added on the sides. They match the colors of the projection. The lights are controlled by DMX messages sent from TouchDesigner over a Wi-Fi network.
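The idea of matching the lights to the projection can be sketched as follows: average the colors of a few pixels, then write the result into the channels of a DMX universe (512 channels, each holding a value from 0 to 255). The three-channel RGB layout starting at channel 1 is a hypothetical fixture configuration, and the sketch stops at building the channel values: how they are transmitted over the network is not covered here.

```python
DMX_UNIVERSE_SIZE = 512  # a DMX universe carries up to 512 channels


def average_color(pixels):
    """Average a list of (r, g, b) tuples into a single (r, g, b) color."""
    count = len(pixels)
    return tuple(round(sum(p[i] for p in pixels) / count) for i in range(3))


def write_rgb(universe: bytearray, start_channel: int, rgb) -> None:
    """Write an RGB color to three consecutive channels (1-based numbering)."""
    if not 1 <= start_channel <= DMX_UNIVERSE_SIZE - 2:
        raise ValueError("start_channel out of range for an RGB fixture")
    for offset, value in enumerate(rgb):
        # Each DMX channel holds a value between 0 and 255.
        universe[start_channel - 1 + offset] = max(0, min(255, int(value)))


universe = bytearray(DMX_UNIVERSE_SIZE)
frame_pixels = [(255, 0, 0), (255, 128, 0), (255, 64, 0)]  # sample pixels

color = average_color(frame_pixels)
write_rgb(universe, start_channel=1, rgb=color)
print("Color sent to the light:", color)
```

In practice you would sample the pixels from the edges of the projected image closest to each light, and refresh the universe at every frame or at a lower fixed rate.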

It's your turn!

Creating music-responsive visuals for a live performance requires some technical knowledge, which can easily be found on the internet. The necessary hardware is also becoming more and more accessible, both in cost and in ease of use. The Stone Mine Mapping project might seem complex at first, but it has the advantage of providing an overview of the tools and techniques available. A much simpler system, reduced to a single computer and speakers, is entirely possible. Many audiovisual performers are happy to trigger their effects “by ear”, following the music they hear, without creating any audio-video link. The result can sometimes be more relevant than letting the computer decide by itself.

Explore the HeavyM software

HeavyM is a video mapping software that is capable of generating visual effects and projecting them on volumes, decorations or architectural elements. HeavyM is Mac and Windows compatible.